Robots live among us. On a recent walk in a suburban South Austin neighborhood, I was startled to see one mowing a lawn. Roughly the size and shape of a horseshoe crab, the dark gray machine (a Husqvarna Automower) trundled over the yard in eerie silence, leaving a perfect grid of freshly cut grass. I was with my dad and my husband, who was pushing our infant son in his stroller. We stopped to laugh at the little bot, but then I noticed that my son was staring at the Husqvarna intently, and I felt a tinge of unease. What role will robots play in his life? According to one industry prediction, nearly 49 million household robots will be sold worldwide next year. That’s not to mention the myriad other kinds of AI, from self-driving cars and trucks to nursing assistants, that are rolling and marching into our lives. Is anyone pausing to consider the implications of a world increasingly shared with robots?

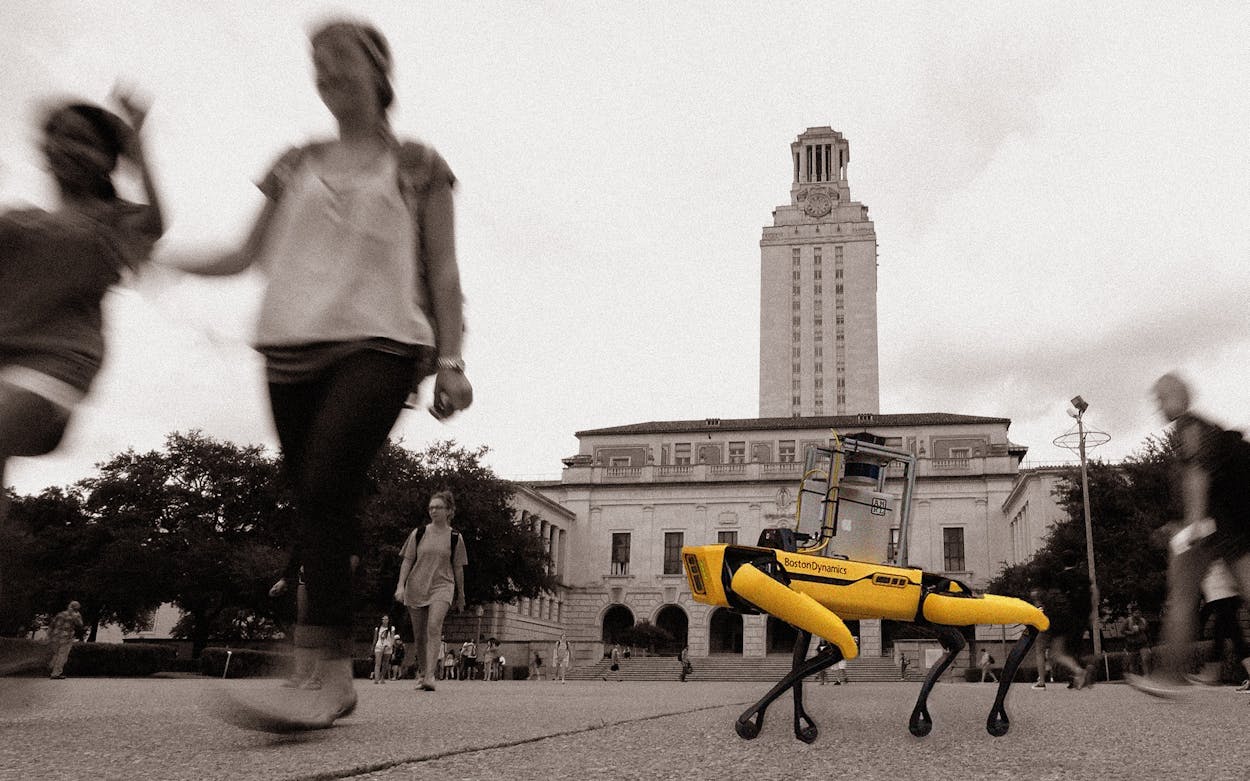

Researchers at the University of Texas at Austin say that they are, and they plan to do so in a rather provocative way: by unleashing a pack of robot dogs on campus, starting early next year. As part of a $3.6 million grant from the National Science Foundation, two kinds of robot dogs—customized versions of Boston Dynamics’ Spot and Unitree Robotics’ B1—will roam the university’s sidewalks, interacting with passersby. As UT announced last week, the robots will deliver hand sanitizer and wipes, partly to discern what aid they might offer following future pandemics and natural disasters.

But the real purpose of this five-year social experiment is learning how well we can peacefully coexist with robots in public spaces. At first, only two mechanical canines will venture out, but eventually the project will include six, says lead investigator Luis Sentis, an associate professor in the Department of Aerospace Engineering and Engineering Mechanics. “Maybe people will love it, and maybe people will hate it,” Sentis says, “and we want that criticism to happen.”

Initial social media reactions to the plan ranged from “No no no no no” to “Kill it with fire.” Commenters were likely responding both to the UT press release’s creepy photo illustration of a robot dog skittering up the steps of a campus building and to any number of frightening fictional and real-life depictions of robot dogs—or quadruped robots, as researchers prefer calling them. Robotic dogs hunted a character in a terrifying 2017 Black Mirror episode, and a more recent viral video from a Chinese defense company appeared to show a quadruped equipped with a gun being dropped off who-knows-where by a drone.

Both companies whose robots UT will use have pledged never to put weapons on their creations, but they still have to face this fact: robot dogs just look scary. Spot, probably the best-known robotic dog model, usually has neither a head nor a tail, so it lacks the reassuring body language of an actual dog. (Indeed, on YouTube, you can watch real dogs reacting with apparent fear and confusion to robot dogs’ awkward attempts at play.) Because this kind of robot often comes across as inherently untrustworthy, its impressive ability to trot about nimbly—with “rough-terrain mobility” and “360-degree obstacle avoidance,” to quote Boston Dynamics’ marketing language—only makes the technology seem more threatening. Public perception is summed up well by the top comment on the 2019 YouTube video in which Spot was launched: “Can’t wait to have a pack of these chase me through a post-apocalyptic urban hellscape!”

I voiced these concerns to Sentis and two of his colleagues on the Living and Working With Robots project, and their response was essentially this: Robots are inevitably going to be a bigger and bigger part of our lives, so we need to grapple with our discomfort around them. Roboticists know that many of us feel uneasy about the technology they’re building. Understanding more about those perceptions, and how they play out in the real world, is what the UT robotic dog experiment is all about. “Twenty or thirty years from now, when we have robots all around us, what should that future look like?” asks Joydeep Biswas, a computer science professor and member of the project. “How should robots adapt themselves to be more useful and less of an annoyance to humans?” He explains that the experiment grew out of an interdisciplinary UT effort called the Good Systems project, which asks an even more fundamental question: “How can we ensure that AI is beneficial—not detrimental—to humanity?”

This kind of research may help urban planners avoid the mistakes they made with previous technologies, Sentis argues. He draws an analogy with the automobile. In the 1950s, the creation of the interstate highway system led U.S. cities to embrace car-centric sprawl. As a result, many Americans live in places that prioritize cars over pedestrians, cyclists, or public transit. And reversing sprawl is harder than building it in the first place, even if researchers now know that dense, walkable communities are safer and better for our physical and mental health. “We’re trying to be ahead of the game,” Sentis says. “When cars were deployed back at the beginning of the twentieth century, if we’d had these kinds of social studies, maybe cities would be very different today.”

The trouble is, no one knows yet what kinds of planning decisions we should be considering for a robot-filled future. The UT researchers are interested in body language and social norms—for example, how a robot should behave when it shares an elevator with a human. How much personal space should a robot give to a person in that scenario? How should it respond if someone engages it in conversation? Sentis says, “Maybe a person asks the robot, ‘Hey, did you see the new construction on Speedway?,’ and then the robot might have to be able to say something interesting and make small talk.”

Sentis also stresses that safety is a top priority. The robots will always be chaperoned by humans when they’re in public, and a lab team will have headsets that provide a robot’s-eye view. The lab can stop the robots at any time, Sentis says. Consent and privacy, two concerns that skeptics raised on Twitter, are also serious considerations. The experiment’s design will have to meet the same stringent ethical guidelines set by the Food and Drug Administration to govern all studies with human subjects, and anyone will be able to opt out. If a passerby seems uncomfortable interacting with a robot or says “No thanks,” the researchers will steer it away and purge that data from the study, Biswas says.

For those creeped out by the appearance of robot dogs, researchers are working on that too. They plan to customize the Spot and B1 models to make them less frightening. “The robots are going to have a tail, and the tail is going to wiggle,” Sentis says with a laugh. The researchers aren’t sure of other specifics yet, but the robots could also sport the Longhorn logo or cute pairs of puppy ears. Either way, the goal is to make the creatures seem friendlier, a bit like Dreamer, a humanoid robot that Sentis and his team endowed with an inquisitive face and a chic, burnt orange hairstyle. Tentative plans also call for the robot dogs to play fetch with passersby. UT has some expertise in the realm of robot games; its (quite cute and nonthreatening) robot soccer team has won two RoboCup world championships.

Playing a game of fetch with a dog might sound simple, but for a roboticist, it’s a task of Herculean proportions. Teaching a robot to throw a ball requires hundreds, if not thousands, of hours of meticulous programming and trial and error. Perhaps because of this, roboticists tend to scoff when asked about Black Mirror–esque scenarios of robot rebellion. “We’re so far away from having self-intelligent robots that even thinking about that is beyond science fiction for us,” Biswas says. “I don’t worry about robots taking over because I’m pretty confident that it will never happen in my lifetime, the next lifetime, or even several generations to come.”

Maybe that answer isn’t surprising. Roboticists are technical wonks, after all. It isn’t their job to consider philosophical questions. It might reassure you, then, that the thirteen-person Living and Working With Robots team includes scholars who do. On the roster are not only engineering and computer science faculty, but also an English professor, an architect, two librarians, and two experts in communication studies.

One of the latter is Keri Stephens, who codirects UT’s Technology and Information Policy Institute. “I think the most exciting thing about our project is we’re going to hopefully introduce more-ethical and safety-conscious ways for robots and people to work together,” Stephens says. Like Sentis, she compares robots to another technological innovation. “We need to make sure we don’t make the mistakes we made with scooters,” Stephens says, describing the dockless electric vehicles that have cluttered sidewalks, led to injuries, and prompted complaints in Dallas, Austin, and other cities. “People left them in the middle of wheelchair ramps, and in my opinion, it was just dreadful, the consequences of them being released too quickly.” Food-delivery robots that are already rolling up and down Austin streets aren’t much better, in Stephens’s view. “Those were kind of just deployed,” she says. “Nobody necessarily studied them first.”

Stephens admits she was surprised that the National Science Foundation funded the UT grant: “We thought our proposal was a bit out there.” Nothing quite like this has been done before. The vast majority of research on robots, she says, has taken place in controlled laboratory settings, not on public sidewalks. “There’s going to be a lot to navigate in a real-world setting—the data will be so much more helpful. We’ve done what we can in the lab, and this is the next step before we just release the technology everywhere.”

In other words: Like it or not, the robots are coming. The question is whether we’ll be ready for them.
